IEX 170: Complexity and Its Discontents

What happens when complexity keeps increasing, and trust keeps declining?

Good morning. Despite my best efforts, this week was washed away by the tidal wave of work that came my way. Normal service will resume next week. Here’s this week’s take on innovation and dealing with complexity.

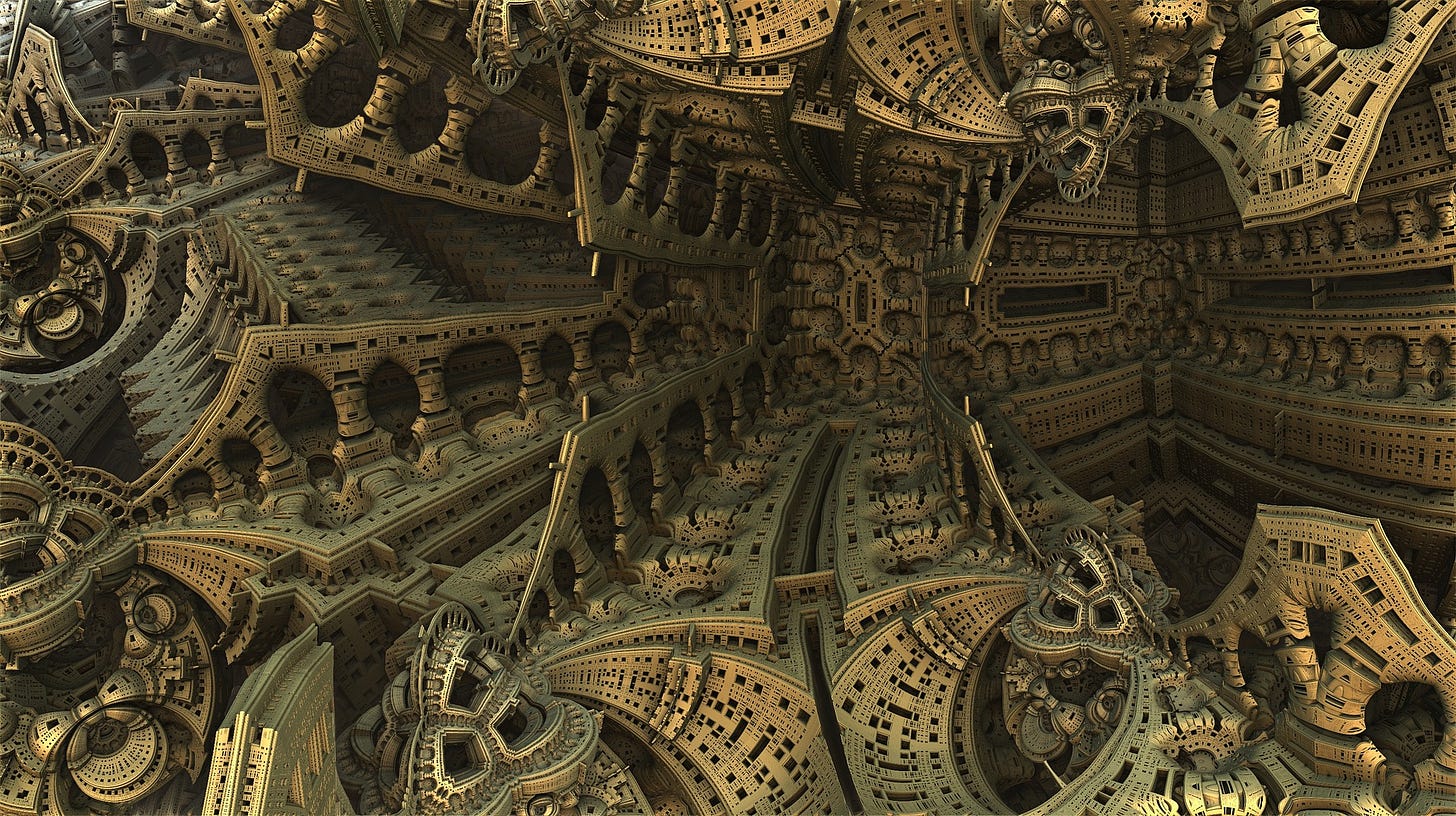

What is your strategy for dealing with head-exploding complexity? Open your browser and skim the news, and you can read about gene-level vaccines, space debris, the impact of the metaverse on transportation, global semiconductor shortages and why we can't put more than a trillion microchips on a wafer, quantum computing, or crypto-currencies and NFTs. Or you can try to decipher cancel culture and the rise of nationalism, or ponder the significant extension of life expectancy that's on the cards. It's a lot to take in. The world is complex. And by the way, this is not new. One of the most insightful books I've read, Breaking Smart, argues that the world has always moved from less complex to more complex. The reverse has never been true, so the feeling of being overwhelmed by complexity is as old as civilisation.

We are surrounded by natural complexity: the origin of the universe, the behaviour of time, gravity and light, or the inner wiring and workings of the human brain. These are all areas that have puzzled and fascinated scholars for centuries. The overlay of human complexity, through social patterns, technological evolution, or macro-economic models, only adds to the problem. The question I keep coming back to, therefore, is: what are our coping strategies? We are directly affected by the turning gears of the world we live in, and history suggests that a few typical response patterns have evolved.

Re-organisation is a typical response; GE is restructuring its business as we speak. Following the experts is another. In so many aspects of our lives we leave it to experts such as lawyers, accountants, financial advisers and doctors to help us make decisions. After all, the world as we know it is modelled on the Ricardian principle of comparative advantage. The challenge here is deciding how much ground to cede to experts. From surgery to spiritualism, and from blockchain to book summaries, there are experts in every area. To what extent should you hand over? Is disengagement an option? You can have a perfectly happy life without ever understanding how NFTs really work, but you probably should understand climate change and your own health. Only a few people have the intellectual stamina to get to the eye of the storm in a complex area, understand what's actually going on and exploit that knowledge. Think Musk, Jobs, Gates, or Bezos. Should all of us aspire to the same level of understanding? And as a business dealing with complexity, should you restructure? Bring in experts, aka consultants? Try to get to the heart of the complexity?

Another fascinating, even innovative, response to complexity is the pattern of alternative hypotheses. We deal with complexity by constructing a completely new, and usually simpler, explanation for phenomena that satisfies us. Religion is a good example. Gods were initially envisioned to explain natural phenomena we couldn't otherwise explain, such as lightning, rain, tides, and earthquakes. Once we had scientific explanations for those, the gods evolved to cover more abstract complexities of emotion and aspiration, such as wealth, music, or war. Even today, organised religion plays an important role in society by helping people make simple choices between good and bad, rather than having to invest intellect and emotion in ethically evaluating every experience. But a darker side of the alternative hypotheses pattern can be seen in religious fundamentalism and in conspiracy theories: 5G or Bill Gates conspiring to give you coronavirus, or climate change denial. These are convenient, simpler stories that allow people to deal with complexity they can't handle. Of course, they are often created and weaponised by vested interests, but their lure lies in their relative simplicity. I remember a story about a friend's child who asked a teacher 'why does the sun rise in the east?' and was told 'because God made it so'. It really doesn't get simpler than that.

There are corporate versions of alternative hypotheses as well. They usually take the form of ill-thought-through slogans such as becoming more 'customer centric' or 'fixing the basics first'. In 2017 Facebook set up a language bot system in which two bots would negotiate with each other. The experiment was turned off after some time. The engineers had not put any constraints on the quality of the language, and over time the bots' language degenerated. But in an excellent example of the alternative hypotheses pattern at work, a number of articles were published suggesting that the bots had invented a new language, and that the experiment was shut down because it had become too creepy.

Today we're on the cusp of another interesting approach: outsourcing complexity to the machine. Even if we don't understand the complex interplay of cause and effect between thousands of variables, the machine (or some version of AI) will make sense of it. Does it matter that we don't understand exactly how the AI works? It's easy to see how this can cause consternation. When computers play chess or Go, they don't play like humans, and the same is true when an AI classifies risk. In a classic case of fighting fire with fire, we've solved a complex problem by using another complexity that we don't really understand. This is why XAI (explainable AI) is such a big theme today.

Here we need to distinguish between trust and explainability. When we go to an expert, we trust their expertise rather than understanding the problem ourselves, and so it is with AI: we need to trust the provider. When you get on a plane, you may not know exactly how it operates, but you trust the pilot, the airline, and the aviation system. You also trust that there are people who know exactly what's going on. The scenario we should all look to avoid is one in which an AI is performing a job and nobody knows how it's doing it. But just because you and I don’t understand it doesn’t mean it’s inexplicable.

The challenge is that we seem to be reaching a tipping point of complexity and trust in everything around us. The combination of increasing complexity and decreasing trust is a potentially hazardous scenario, one that leads to conspiracies, half-truths, false theories of risk compensation, and fake experts. Citizen emptor!

Reading This Week

Electric Vehicles: Who are the world’s top EV battery makers? ... and who are the world’s leading EV makers? (Visual Capitalist)

Healthcare: Understanding the brain and chronic pain - new options for treatment (NYT)

Identity Systems: Advantages and disadvantages of Aadhaar, the world’s biggest ID system. (FT)

Climate Change: Winners and losers of climate change programs. (Fast Company)

Data Visualisation: The Internet minute in 2021 (Visual Capitalist)

Teleportation: The simple science of why it can’t work. (Wired)

Metaverse: Seoul becomes the first city government to join the metaverse. (Quartz)

Covid Detector: A product that checks the air in your room for the virus. (Fast Company)

AI Application: A language AI that adapts accents as you speak with it. (IEEE)

IoT Report: Hot off the press, a McKinsey report published in November on the growing opportunities in the world of IoT.