#244: Automating Decisions with AI

Model-driven rather than rule-driven automation will reshape how AI helps drive decision making in organisations.

Good morning. It seems like the AI revolution is well and truly upon us. While a lot of discussions are playing out around AI strategies, setting up AI offices, and identifying AI initiatives, it has always been my understanding that the core value of AI is its ability to automate decision making. I’ve written earlier about how we select complex decisions for AI. But today I want to take a closer look at the business cycles and the categories of decisions we make, and how AI applies to them.

This literature summary about AI and the decision making process, which looks at decision making across 3 sectors (healthcare, finance, tech), concludes that the adoption of AI is a given, and that the focus should be on how, not whether, to adopt AI for decisions. Only 2 papers have negative conclusions, compared to the 78 positive and 38 neutral papers.

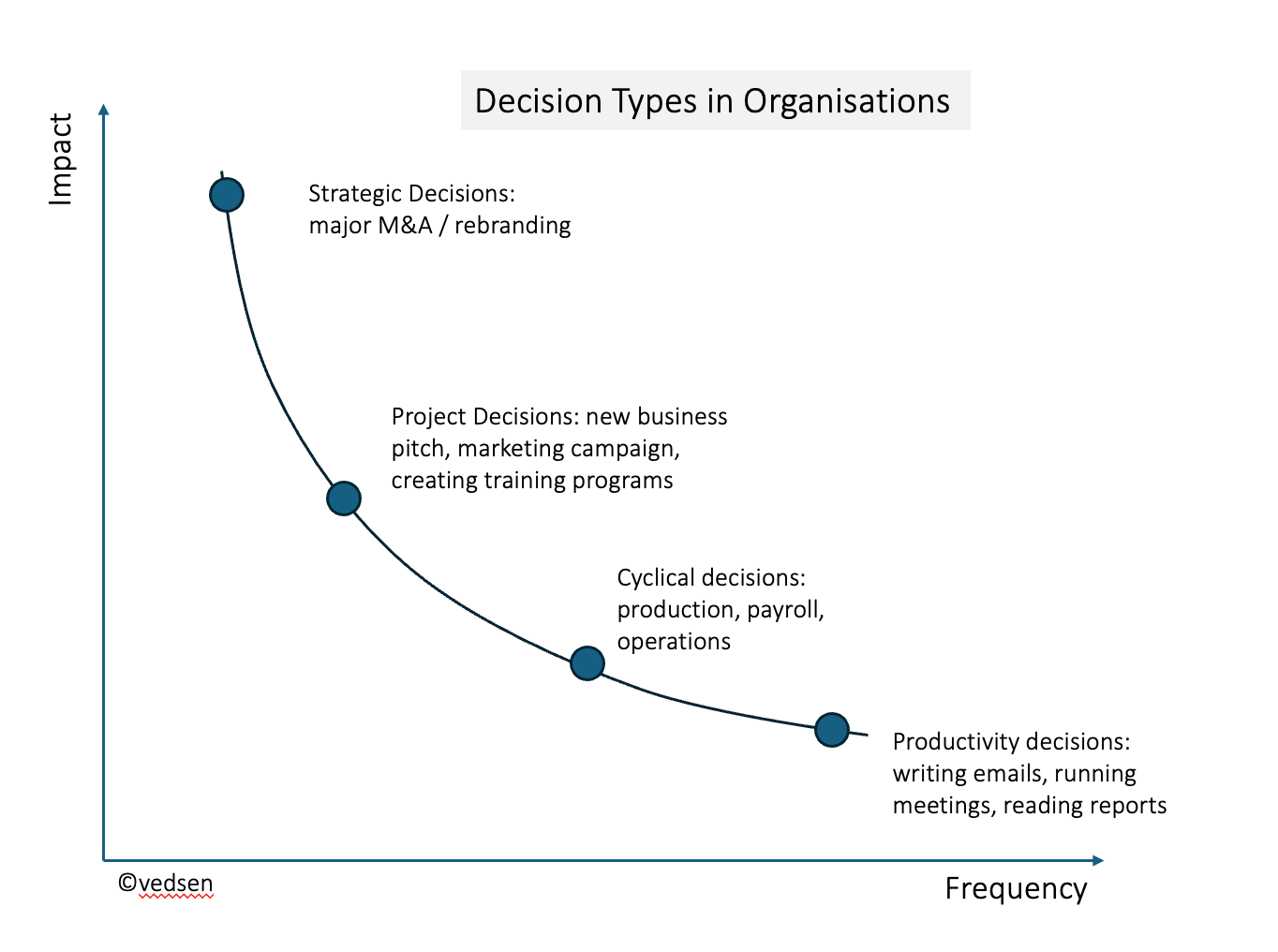

But first, let’s unpick management decision making in organisations and distinguish between 4 types of decisions, just for the convenience of this analysis.

Types of Decisions

Strategic Decisions: These are decisions we make infrequently. Each one has a significant impact, and they are often unique in their framing, the choice set, the complexities involved, and the difficulty of the decision. For example, for an individual, which undergraduate university to join is a strategic decision you will most likely make once in your lifetime, based on your aspirations, self awareness, skills, academic performance, your world view, the knowledge you have about the universities, and so on. For a company, significant choices about getting into a new line of business, creating a new brand, or a merger, are strategic decisions, as are restructuring, spinoffs, or even creating an organisational strategy - something we do once every 12-18 months at best. Often we learn from the experiences of others. Characteristics: low frequency, high impact, high complexity, no homegrown playbook.

Project Decisions: these are typically decisions that you make regularly enough to have a ‘playbook’ for. While often complex, you have past experience to fall back on. Planning holidays is a good personal example. For an organisation, say a retailer, opening a new store is a complex project (a large retailer has probably done it enough times to have a manual about all the steps involved and the challenges). Projects tend to have high salience - each new store you open may have unique challenges, but there is a knowledge base to work off. For a company that does acquisitions regularly, a new acquisition is a project, rather than a strategic decision. Other decisions which we can think of as projects include marketing campaigns, running graduate recruitment programs, building new software or implementing new off-the-shelf software, CSR programs, appointing suppliers, and so on. Each of these will have a number of decisions to be made within the project. Characteristics: regular occurrence, medium to high impact, reasonable complexity, usually follows an implicit or explicit playbook and should have defined best practices.

Cyclical Decisions: these are well drilled routines you go through very regularly. The choice set is often quite narrow, the options are well known, and the choice criteria are also very well established. Dropping kids to school, or your daily commute are examples where you make these kinds of cyclic choices. You may have a second route to take if your preferred route is unavailable for any reason, but the chances are you know what your option B is and you seamlessly switch to it as needed. A hospital nurse’s daily routine involves many cyclical decisions, for example - each patient based on their needs has a set of activities to be performed and also depending on their health, a nurse (or doctor) may have to make daily choices about their care. Ironically a fast food chain worker may have very similar overlapping cycles of decisions. A hospital can be very complex, or appear chaotic, such as in an emergency department buffeted by a constant stream of new and urgent cases. But they are (or should be) just a series of overlapping cycles. For a hospital nurse, each individual case will go through a standard set of tests, choices, and decisions. Typical cyclic decisions in enterprises include production runs, payroll, and all your regular business operations - operating flights for airlines, or intra-bank settlements for high street banks, or delivering parcels for the Royal Mail. Characteristics: high frequency, variable impact (nurses vs fast food chains), limited options, well defined process.

Productivity Decisions: These are the everyday choices we make which aren’t captured in well managed cycles, but impact our productivity and daily lives and work. Personally, it might be your choice of what to wear to work, or whether or not to cook or eat out. In organisations a common example is agreeing meeting times by exchanging a series of emails about available slots. Characteristics: high frequency, no process, low individual impact.
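To make the four categories concrete, here is a minimal sketch of how the characteristics listed above could be turned into a simple classifier. The field names and the yes/no simplification of each characteristic are my own assumptions for illustration; real decisions will rarely sort this cleanly.

```python
from dataclasses import dataclass
from enum import Enum


class DecisionType(Enum):
    STRATEGIC = "strategic"
    PROJECT = "project"
    CYCLICAL = "cyclical"
    PRODUCTIVITY = "productivity"


@dataclass
class Decision:
    # All three flags are illustrative simplifications of the
    # characteristics listed for each decision type.
    name: str
    high_frequency: bool    # made daily or weekly rather than occasionally
    has_playbook: bool      # an implicit or explicit playbook exists
    defined_process: bool   # runs inside a well defined, drilled routine


def classify(decision: Decision) -> DecisionType:
    """Map a decision's characteristics onto one of the four types."""
    if decision.high_frequency:
        # High frequency: cyclical if there is a process, productivity if not.
        if decision.defined_process:
            return DecisionType.CYCLICAL
        return DecisionType.PRODUCTIVITY
    # Lower frequency: project if there is a playbook, strategic if not.
    if decision.has_playbook:
        return DecisionType.PROJECT
    return DecisionType.STRATEGIC


examples = [
    Decision("merger", False, False, False),
    Decision("open a new store", False, True, False),
    Decision("payroll run", True, True, True),
    Decision("agree a meeting time", True, False, False),
]
for d in examples:
    print(f"{d.name}: {classify(d).value}")
```

The point of the sketch is only that frequency and the existence of a playbook or process do most of the sorting; impact and complexity would refine the picture further.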

When we think of decision automation and AI - which of these kinds of decisions do you think we’re talking about? Where should AI focus?

Cyclical decisions have historically been the easiest to automate. The limited set of choices at each stage and the repetitive nature of the decisions make them both easy and valuable to automate. In fact for a lot of such environments, we have not needed AI to get to a reasonably high level of automation. Although it has to be said that there’s a lot of AI going into improving the quality of cyclical decisions to drive them beyond human capabilities - whether it’s retailers predicting stockouts, or banks driving better risk management.

The nurse’s job involves more complex cycles, whereas the cycle for a fast food worker, or a time sheet and payroll cycle, is relatively more straightforward. Traditional automation has relied on tight scripting, limited choices, and the well defined step by step nature of these cycles. But when we try to apply this to more complex environments - things tend to get out of hand. For example anybody who’s been subject to a contact centre person having to follow a script knows how frustrating that can be. As humans, when we make more complex decisions we usually do so with some kind of mental model of the inputs, options, implications, and risks of these decisions. Let’s call this a model based decision.

So we need to distinguish between traditional (rule based) and intelligent (model based) automation when speaking about decision automation through AI. And we need to step outside the world of tightly defined cyclical decisions, into the world of project and productivity decisions. This is where much of middle management lives. Let’s keep strategic decisions aside simply because of their infrequent occurrence (although AI can definitely help with the data, scenarios, and trends to help with strategy formulation), and focus on projects - this is arguably one of the more inefficient aspects of organisational work and decision making. I don’t mean projects that are actually defined formally as projects - these tend to get more structured treatment - but all the other work that’s carried on which should have project management discipline but doesn’t.

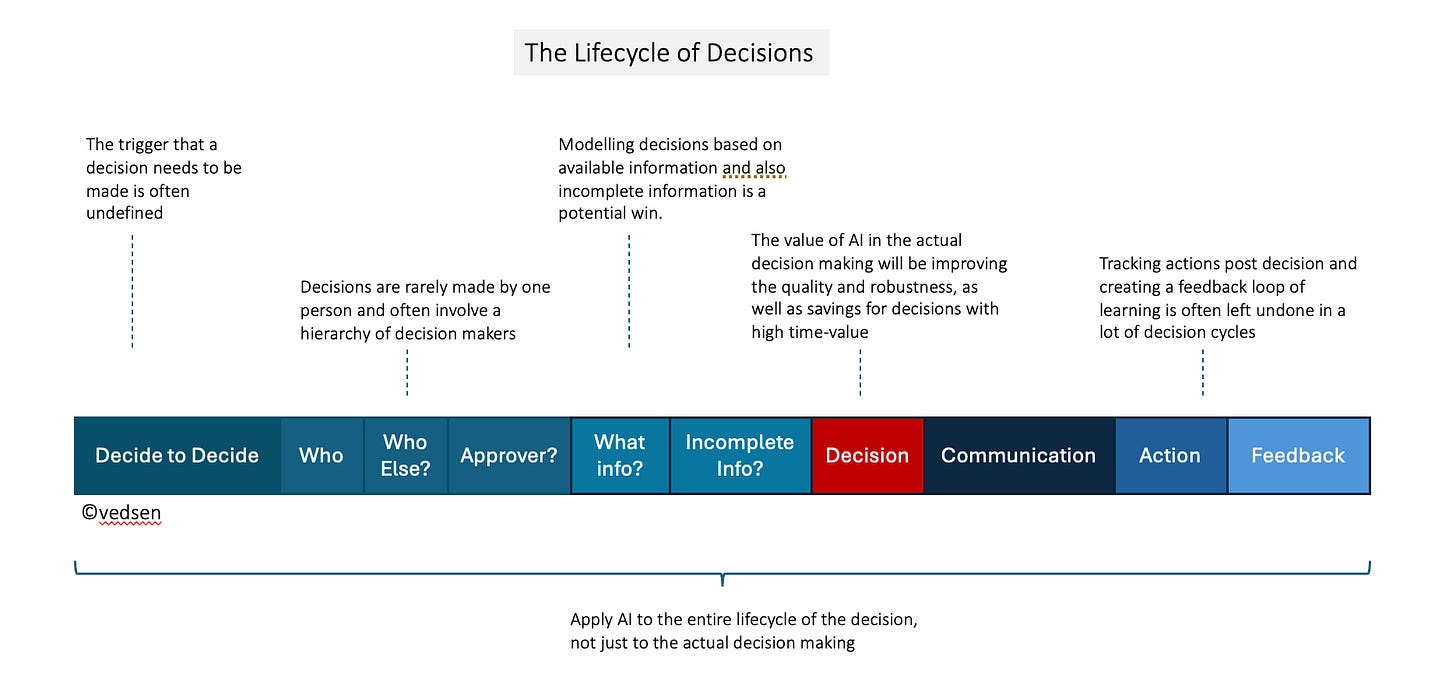

When I think about project decisions we make at work, I see a complex series of events before and after any actual decision which illustrates why this is harder than a simple automation. Let’s say you’re planning a customer experience improvement workshop, and a key early decision is agreeing an agenda.

Lifecycle of Decisions

Decide to decide: Often, the first challenge is the recognition that a decision needs to be made. I’m sure you’ve been in meetings that seem to go on for ever and yet nothing is decided. Our first decision is actually calling for a decision on a topic. I see many projects suffer because key choices were not made at the right time. Your workshop project has had 6 meetings but despite many discussions and inputs, there is no final agenda yet.

Whose Call? Often it’s unclear as to who needs to make the decision. This should be blindingly obvious but I’m always surprised at the frequency with which the lack of clarity around this pops up. You haven’t actually identified an owner for your workshop agenda so everybody feels comfortable sharing their views but nobody is on the hook for taking the call. How is the decision made - is it a consensus? A vote? A single person’s judgement? Is this clear to everybody?

Who needs to agree with the decision? Are they all in the conversation? If not, it means further discussions are required. Does your workshop agenda need to be reviewed by the attendees? Are there key stakeholders? Do you know who this list needs to include?

Who needs to approve? A common pattern is for people to spend a lot of time debating and deciding something only for a senior person to provide a completely different direction. You finally get to an agenda but your boss feels that the focus of the workshop should be different. And who knows, her boss might have yet another view. I think in a lot of organisations, managers prefer their teams to do a lot of the thinking and come back with options they can choose from, and their own thinking evolves over time. This is natural but highly inefficient. We need to shift left on decision making.

What information needs to be made available for the decision? This is a big one. Historically a lot of decisions are based on gut, or the judgement of an individual. But ideally they should be objectively done with clarity about parameters. Do you know what information you need to decide the right agenda for your workshop? Or is it somebody’s intuition?

Incomplete Info: Even if you do know the required information set, what if it’s incomplete? So many decisions have to be made with incomplete information. Let’s suppose your workshop was to drive the future customer journeys for your business, but you don’t have the data that’s due from a piece of research. Should you wait and delay the decision or could you decide without the data?

Communicating the decision - once you’ve made a decision, and it has been rubber stamped, it needs to be clearly articulated and communicated to all the right people - or rather, all the affected people. Communicating decisions may or may not come with the reasons. Hard decisions sometimes do but not every decision needs a detailed reasoning. Now that your agenda is fixed, all attendees, speakers, facilitators and support teams need to be given the final agenda so they know their timings and cues.

Implementing the Decision: Now we get to the impact of the decision. Consummating the decision - with follow through and actions. It’s no good making a decision if it doesn’t get implemented. So your agenda now perhaps needs to lead to a plan for each session from each of your facilitators, with clear timelines and dates.

Feedback and improvement: Every decision only looks good or bad in retrospect - but decisions can only get better over time if there is appropriate feedback, validation, and an effort to improve the quality of decision making. This is often a black hole in many organisations and as a consequence, the same mistakes get perpetuated, and there is little improvement. Will your next meeting, or workshop, or agenda creation exercise be any better?

Every decision also needs to be classified by the level of risk, the number of options to be considered, previous data available, the level at which the decision is being made, so we can further classify decisions, but for now, let’s just look at that sequence above.
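The sequence above can be sketched as a simple checklist that tells you which stage a decision is stuck at. This is only an illustration: the stage labels are my shorthand for the steps above, and the `next_open_stage` helper is hypothetical rather than part of any tool.

```python
from typing import Optional

# Shorthand labels for the nine lifecycle steps, in order.
LIFECYCLE = [
    "decide to decide",
    "whose call",
    "who needs to agree",
    "who needs to approve",
    "information required",
    "handle incomplete information",
    "communicate",
    "implement",
    "feedback and improvement",
]


def next_open_stage(completed: set[str]) -> Optional[str]:
    """Return the earliest lifecycle stage not yet completed, or None if all are done."""
    for stage in LIFECYCLE:
        if stage not in completed:
            return stage
    return None


# The workshop agenda: everyone agrees a decision is needed,
# but nobody has been named as the owner yet.
workshop_agenda = {"decide to decide"}
print(next_open_stage(workshop_agenda))  # whose call
```

Even something this basic makes the gap visible: six meetings in, the agenda decision is still sitting at the second step.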

Where can AI help here? And as importantly, what should we do differently to improve our decision making with AI? A lot of the thinking around AI tends to focus somewhere around steps 5 and 6 and before 7, when the actual decision gets made. But I think that misses a trick about how the entire process could be improved. Here are 5 ways in which AI can be used to make decision making more effective:

Build the project playbook and identify decision points and patterns: unlike cycles, most organisations don’t have ready playbooks and defined steps for projects. There are too many projects and the variety is too wide. From implementing an IT system, to setting up a new office, to creating a new training program - each of these is a project. And at any given point there are hundreds of such projects in play in any organisation, with varying sizes, complexities, and patterns. An AI engine can potentially create a model of a project with key decision points identified, effectively creating a playbook. One of the many challenges of project decisions is that while they recur across the organisation, the personnel are often different for each one, so no institutional learning or standard practice evolves. Of course there is plenty of formalisation around projects that form core business - such as software development projects for technology firms, or campaign management for a marketing consultancy. But 80% of project decisions tend to fall outside of this core.

Since we do a lot of our project planning via Teams, I just asked Microsoft CoPilot to build me a typical framework for Innovation Workshop planning at the Pace Port. CoPilot analysed 54 workshops we’ve run (with multiple clients, internal teams and stakeholders), and came up with an initial plan. I asked it to put it into a timeline, which it did, and it offered to create an Excel-based planner. The planner was far too basic, but I only spent 5 mins on this as an illustration of this point. I’m definitely going to treat it as a collaborator to drive a much higher level of planning and modelling.

Ensuring feedback and improvement: I was impressed that one of the things CoPilot included in its 6 week workshop model was post workshop review and feedback. All too often, once a project is completed, people forget to do the retro or the feedback session and it all gets lost. An AI engine can be trained to capture the feedback from the project and compare against an ideal plan. Was the agenda created too late? Were the stakeholders not communicated with adequately? Did something get missed from the list of things to be done?

Creating a communication plan (and other time saving ideas): this is a simple time and effort saving device. It’s one of those things that sometimes suffers because everybody is focused on the more immediately critical aspects, but we know that executing a clear communication plan for stakeholders at various stages of the project can turn out to be a make or break part of your initiative. This is probably one of many labour saving areas of AI which can be applied to a project. An alert style update for all stakeholders when decisions are made about a project is a simple way of keeping everybody in the loop.

Modelling and making the actual decision: We finally come to the core of the argument - the actual decision making. Needless to say an AI engine can be trained in a domain to help model decisions and make them better. I want to highlight the modelling over the actual decision making, because at least for the foreseeable future (next 3-5 years) we may see a need for a human-in-the-loop for any significant project, especially if the downside risk is high, or accountability is an issue. So more than making the decision, the AI will actually identify the data required for this decision, and if the data is available, actually gather the data and make a recommendation so that the human owner of the decision can approve/ decline/ query/ modify the decision.
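As a rough sketch of that human-in-the-loop pattern, the AI’s output can be treated as a recommendation object that the human owner then approves, declines, queries, or modifies. Everything here (the class names, fields, and the `resolve` function) is a hypothetical illustration of the idea, not a description of any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Response(Enum):
    APPROVE = "approve"
    DECLINE = "decline"
    QUERY = "query"
    MODIFY = "modify"


@dataclass
class Recommendation:
    # Hypothetical structure for what an AI engine hands to the decision owner.
    decision: str
    proposed_option: str
    required_data: list[str]
    available_data: dict[str, str] = field(default_factory=dict)

    def missing_data(self) -> list[str]:
        """List the required inputs that have not been gathered yet."""
        return [item for item in self.required_data if item not in self.available_data]


def resolve(rec: Recommendation, response: Response,
            modified_option: Optional[str] = None) -> Optional[str]:
    """Apply the human owner's response; return the final option, or None if undecided."""
    if response is Response.APPROVE:
        return rec.proposed_option
    if response is Response.MODIFY:
        if modified_option is None:
            raise ValueError("a modified option is required when modifying")
        return modified_option
    # Declined or queried: no decision yet, the recommendation goes back for rework.
    return None


rec = Recommendation(
    decision="workshop agenda",
    proposed_option="three sessions on future customer journeys",
    required_data=["attendee list", "customer research"],
    available_data={"attendee list": "confirmed"},
)
print(rec.missing_data())              # ['customer research']
print(resolve(rec, Response.APPROVE))  # three sessions on future customer journeys
```

The design choice worth noting is that the final call always comes from the human response, while the model’s job is to surface the option and flag what data is still missing.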

We have recently built an agentic system for major incident management for a client. When your mission-critical systems go down and every minute costs you thousands of pounds/dollars in lost business, you want to accelerate the decision making. Here the problem wasn’t the absence of a process, but that AI agents could run through the multi-threaded process much faster and significantly reduce the problem resolution time.

This also highlights the different types of benefits AI can drive into decision making.

Decisions with time value implications can be made faster

Decisions with complex requirements can be made more robustly - AI tools can expand the range of options and identify more factors than humans.

Human time and effort can be saved across the cycle of the decision

Learnings can be accrued across projects so that best practices can evolve for organisational capability building

And you can reduce risk, costs, drive consistency, and enable productivity and so on, as this piece summarises well.

Human Shortcomings

Lest we forget, in all of this, there are some obvious challenges in the current model of human decision making. Broadly these break down into:

The limited ability we have to process complex data in short amounts of time. If you’re running a large construction project for example, and you have to make a decision involving a sudden weather disruption, you will struggle to take into account all the dependencies in a multi-tiered supplier and workstream environment.

Our biases, built out of our experiences. They say a general is always fighting the previous war. Our experience can come up short when the challenges we face are completely new. And of course our own cognitive biases are by definition invisible to ourselves. AI can have biases as well, but remember AI biases are potentially easier to identify and fix than human ones.

The impact of the risk, which can often stymie the decision itself - sometimes people struggle to make decisions because of the potential risk and downside of the decision, thereby delaying the process.

The levels of coordination required and the communication overload. Complex stakeholder environments often slow down decisions and their subsequent ratification and follow through.

The most important thing here is that the way we go about this is not like traditional automation. We are essentially starting from unstructured environments so the initial modelling and organising of the environment requires the intellectual heavy lifting. Identifying the structured and unstructured data (such as Teams transcripts) for the AI to learn from and act on is the second area of effort. And getting people to work differently with these AI tools is a third big part of any such AI program. Remember in some of these examples we may not just be replicating the current ways of working, even though that can be an expedient starting point.

The Future: Intelligent Choice Architectures

An underlying and emergent area of thinking is the use of Intelligent Choice Architectures (ICA). This builds on Thaler and Sunstein’s work on Choice Architectures. ICA looks at the world of constantly evolving choices, and helps to build more context aware decision environments. The real value of AI here is actually giving a decision maker a better set of choices. Better could be a wider set of options in some cases, or a narrower one in others (when the original set is too wide). It could also adapt the stakeholder map as the decision is made and evolves. As we go down this path it could even require leaders to rethink how they lead - if AI gets really good at framing and making decisions - this cultural shift may be the biggest challenge for adopting AI in decision making.

AI Reading

The AI powered Cyberattack Era: poor language, multimodal prompt injection, exploiting AI browsers - just some of the ways in which AI systems are being hacked (Computerworld)

Agentic AI: A CISO’s security nightmare in the making? New risks include multi stage attacks, unwarranted data sharing, lack of visibility, rewarding evasion, supply chain, shadow AI, and more. (CSO)

Don’t let hype about AI agents get ahead of reality “There’s also the assumption that agents are naturally cooperative. That may hold inside Google or another single company’s ecosystem, but in the real world, agents will represent different vendors, customers, or even competitors. For example, if my travel planning agent is requesting price quotes from your airline booking agent, and your agent is incentivized to favor certain airlines, my agent might not be able to get me the best or least expensive itinerary. Without some way to align incentives through contracts, payments, or game-theoretic mechanisms, expecting seamless collaboration may be wishful thinking.” (MIT)

The AI Revolution Won’t Happen Overnight: “next up: Multimodal AI and Compound AI systems—technologies that process multiple types of input and work together like human cognition. A self-driving car doesn’t rely on a single data source; it integrates LiDAR, radar, GPS, and live sensors to navigate. AI will need to do the same, layering models that analyze vision, sound, text, and real-time data.” (HBR)

AI in Education: This excellent edition of the NYT Hard Fork Podcast talks through 2 examples of AI in education - the Alpha School and a discussion with D. Graham Burnett, Historian of Science and Technology at Princeton University, who eloquently describes AI as an “…alien familiar ghost god monster child” (NYT)

Other Reading

Do we need humanoid robots? The research suggests that while humanoid robots are okay, special purpose robots may be a better answer than seeking to replicate human form. (IEEE Spectrum)

Updating our mental models of risk for environmental disasters: Our approach to risk is challenged because (1) psychologically, we tend to focus on tangible short term risks (say a forest fire), rather than more significant but longer term, more abstract risks (climate change), and (2) we treat risks as isolated events rather than interconnected systems with potentially chain like events - such as a climate disaster that impacts the energy grid. This creates significant gaps in our risk models, which are sometimes exacerbated by policy decisions. The article recommends updating our risk models based on a 4 part approach: (1) Hazard - understanding the interconnected risks such as impact of land use on climate risk (2) Vulnerability - including the perverse impact wealth may have on risk perception (3) Exposure - beyond the immediate impact, such as home insurance going up across the board because of the California fires, and (4) Response - which tends to focus on recovery from the immediate challenge - e.g. hurricanes, and less on strategic adaptation. (Issue.org)