#223: Safety - The Cyberphysical World

Technology creates huge opportunities for better safety management, but it also creates significant new problems. Here's a look.

I had the privilege of presenting on the impact of technology on safety operations this week. It was way out of my comfort zone, so I learnt a fair amount doing it as well.

Safety is critical to evolution. If we didn’t have a ‘fight or flight’ algorithm built deep into our genetic makeup, we wouldn’t be here today. In complex industrial work, safety is an industry in itself and a well-developed function. What’s new is the overlay of technology, which adds new dimensions to the picture.

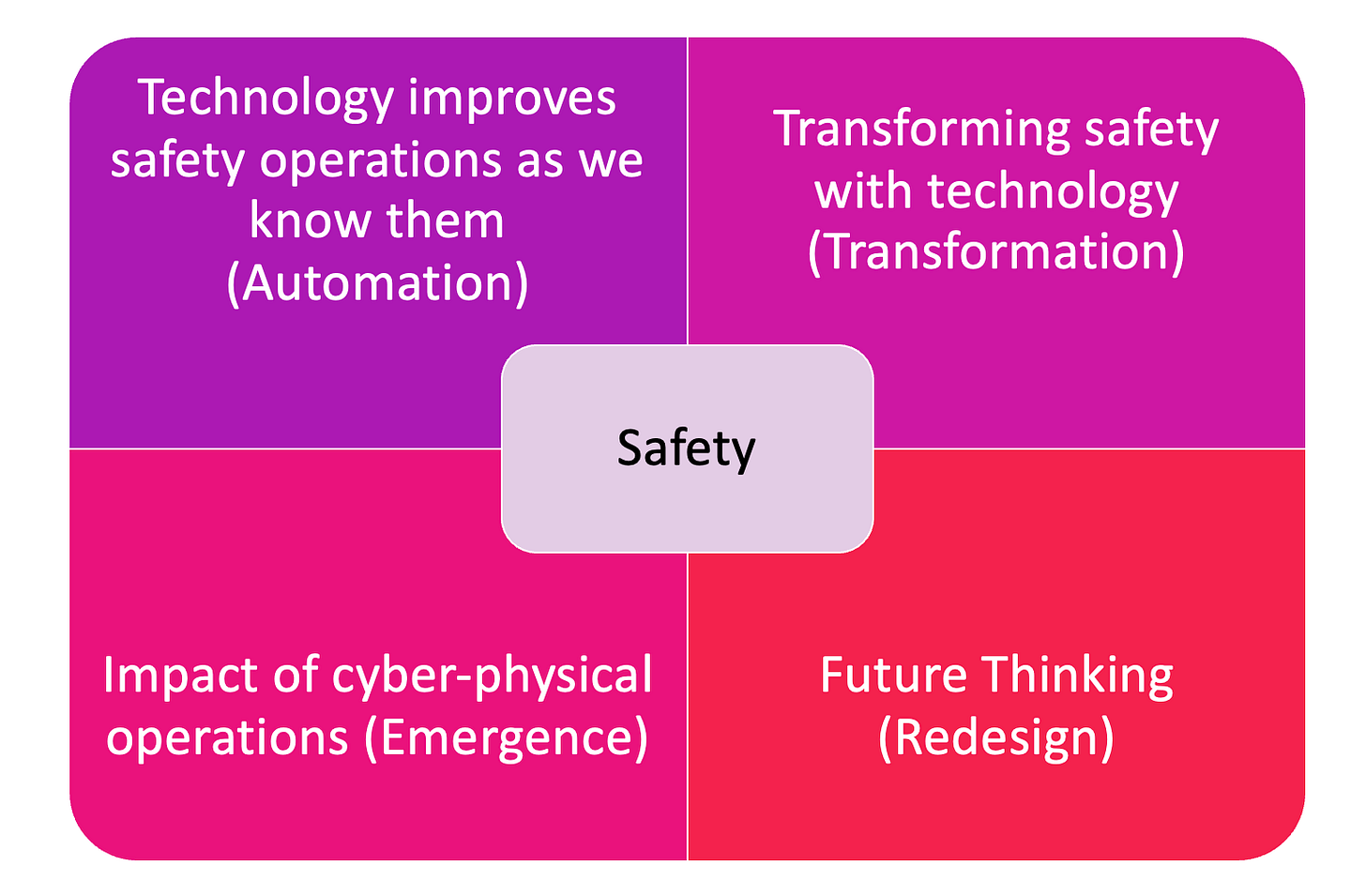

We can look at 4 ways in which technology impacts safety operations:

Automation: improving current operations by making them faster and more efficient.

Transformation: using technology to actually change the way we run safety operations.

Emergence: the range of entirely new risks we have to deal with, especially with cyber-physical systems and the threat of cyber-attacks.

Redesign: based on our learning and use of technology, the opportunity to redesign the environments we work in for improved safety outcomes.

Any safety operation will tend to have a model like the one below. You scan continuously for risk, you prevent as much as possible, you build appropriate responses for incidents that do happen, you solve (i.e. do root-cause analyses), and, based on the learnings, you redesign your safety environments.

Threat models: What are we scanning for?

When I listed all the possible threats to safety that I could think of, it seemed natural to club them into these 4 categories:

Environmental threats: e.g. fire, flood, earthquake

Accidents: e.g. collisions and injuries, usually equipment-related but also including falls

Long-term impacts: e.g. repetitive strain injuries from working on machines or lifting loads, as well as illnesses

Human attacks: e.g. acts of terrorism, protests, antisocial behaviour, including cyber-attacks

Keep in mind that they’re not always distinct. Sometimes they cascade into each other, and often the damage is caused as much by the secondary impact as by the original incident.

Do all these have early warning signs? I suggest the short answer is yes, although we haven’t always been good at picking them up. Sometimes the warning is just a few seconds; at other times the signal is too weak. As technology evolves, our ability to capture and respond to these weak or sudden signals improves significantly. This happens in 2 ways. First, our ability to pick up signals through sensory means is significantly enhanced, and second, our ability to continuously analyse them and identify the critical ones has also improved exponentially.

Hence my assertion that scanning is one area that has been transformed by technology. After all, scanning is a sensory activity, and while we were earlier restricted to our own (very limited) sensory organs, we now have eyes, ears and noses that can not only number in the thousands but also be widely distributed.

Think about a forest ranger having to walk around checking for potential fire hazards, relying on his own senses and experience, versus a swarm of drones circling the same area, feeding their images into a system that scans for patterns indicating the likelihood of fire. The same logic can be applied to floods, other natural disasters, or even conflict zones.

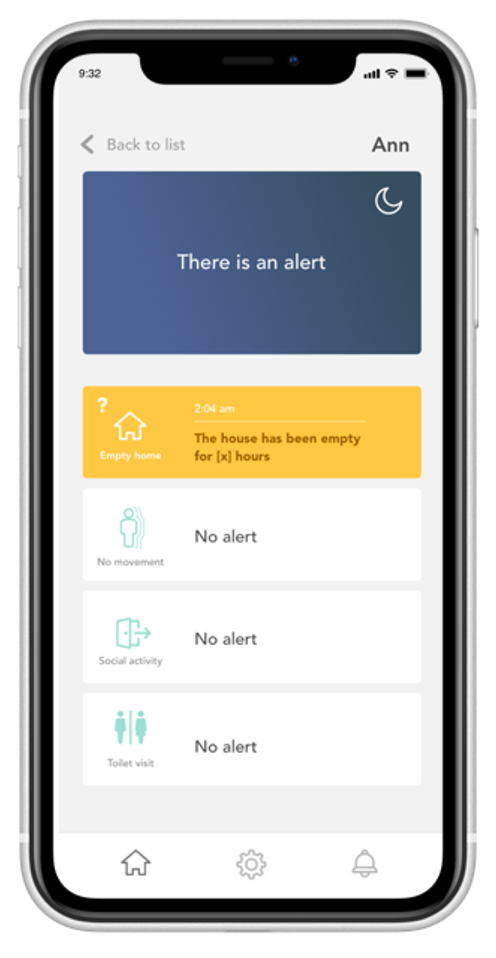

Drones and CCTV cameras can capture visual cues, but tools like the eNose can pick up olfactory inputs, such as hazardous or insanitary conditions, the difference between fresh and stale food on a shelf, or a gas leak that humans cannot detect. Simple contact sensors can tell you if a door is open or closed, and motion sensors can pick up the presence or absence of people. We used technologies like this in our project to keep older people safe, deploying non-intrusive sensors in homes and generating alerts whenever the system identified a break in pattern.
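
The project's actual detection logic isn't described here, but a "break in pattern" alert can be sketched simply. This is a minimal sketch, assuming each sensor reports hourly event counts and that a break means an hour whose count sits far outside that hour's historical average. The function names, the 3-standard-deviation threshold and the synthetic kitchen-sensor data are all hypothetical.

```python
from statistics import mean, stdev

def build_baseline(history):
    """history: one list of 24 hourly event counts per past day (needs >= 2 days).

    Returns a (mean, standard deviation) pair for each hour of the day.
    """
    baseline = []
    for hour in range(24):
        counts = [day[hour] for day in history]
        baseline.append((mean(counts), stdev(counts)))
    return baseline

def pattern_breaks(baseline, today, threshold=3.0, min_std=0.5):
    """Return (hour, count, usual_mean) for hours whose count is more than
    `threshold` standard deviations away from that hour's baseline."""
    alerts = []
    for hour, count in enumerate(today):
        mu, sigma = baseline[hour]
        sigma = max(sigma, min_std)  # floor the spread so perfectly regular hours don't divide by zero
        if abs(count - mu) / sigma > threshold:
            alerts.append((hour, count, round(mu, 1)))
    return alerts

# Hypothetical kitchen motion sensor: 4-6 events at 7am every morning for two weeks.
history = []
for d in range(14):
    day = [0] * 24
    day[7] = 4 + d % 3
    history.append(day)

quiet_morning = [0] * 24  # no kitchen activity at all today
print(pattern_breaks(build_baseline(history), quiet_morning))  # → [(7, 0, 4.9)]
```

A fixed threshold like this will also fire on unusual but harmless days (a lie-in, a visitor), so a real deployment would likely need tuning per household.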

AI In The Wild

The explosion of AI and the proliferation of Gen AI tools across the world mean that almost everybody has access to extremely sophisticated tools. The would-be teenage hacker in his or her bedroom can now access tools that create deepfake videos, or hyper-realistic messages that misinform or deceive in order to gain access to your systems. This is effectively a continuation of the one-upmanship that has been the hallmark of cybersecurity and espionage. Staying one step ahead is the name of the game.

Emergence: Cyberphysical Systems

As more of our environment is controlled and managed with the help of technology, it opens up an entirely new category of risk. Cybersecurity is something we’ve all had to educate ourselves on, in our personal and professional lives. The London Olympics had over 200 million cyber-attack attempts. The number more than doubled to 450 million by the time the Tokyo Olympics came around. This time round, in Paris, we can expect even more, especially with bad actors having access to sophisticated technology.

This becomes more critical as our energy grids, our cars, our hospitals and our air traffic control towers become more dependent on technology-based operations. It means the impact of cyber-attacks can be even greater over time, especially keeping in mind that a cyber attacker’s modus operandi can be to infiltrate, not just to disable.

Emergence: New Risk Categories

The introduction of robots, drones, and autonomous machines adds a new dimension to safety. A moot question is whether autonomous vehicles make us more or less safe. (The trolley problem always comes up here, but that’s a red herring, because it exists even without autonomous vehicles.) Given the pace of technological change, it seems likely that over time these systems will become much safer. But the transition period, where humans and machines coexist in decision making, can be quite tricky. The evolution of networks is another critical factor: networks are like the nervous system that transmits sensory perception to the brain and decisions back out to the edges. Without a reliable communication channel, the response will be delayed, and hence this carries high risk. For example, 5G networks can allow autonomous robots in warehouses to move at higher speeds while working alongside humans, because the network’s reliability and low latency allow them to stop in time to avoid possible collisions. That higher speed can make all the difference to whether the business case stacks up. Schiphol has started trials of autonomous buses at the airport. On the other hand, robots are making work safer as well.
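
To see why lower latency can translate into higher speed, a simple kinematic model helps: a robot moving at speed v keeps going for the network delay t before it starts braking at deceleration a, so it needs v·t + v²/(2a) metres to stop. Solving for the largest v that fits a fixed clearance gives the sketch below. The clearance, deceleration and latency figures are illustrative assumptions, not measurements from any 5G deployment.

```python
import math

def max_safe_speed(clearance_m, latency_s, decel_mps2):
    """Largest speed v (m/s) such that v*latency + v**2 / (2*decel) <= clearance.

    The robot keeps moving at v for `latency_s` before braking starts, then
    decelerates uniformly at `decel_mps2`. Solving the quadratic for v gives
    v = a * (-t + sqrt(t**2 + 2*d/a)).
    """
    a, t, d = decel_mps2, latency_s, clearance_m
    return a * (-t + math.sqrt(t * t + 2 * d / a))

# Illustrative numbers only: 1.5 m clearance to the nearest person, 2 m/s^2 braking.
for latency in (0.2, 0.02):
    v = max_safe_speed(clearance_m=1.5, latency_s=latency, decel_mps2=2.0)
    print(f"latency {latency * 1000:.0f} ms -> max speed {v:.2f} m/s")
# latency 200 ms -> max speed 2.08 m/s
# latency 20 ms -> max speed 2.41 m/s
```

Under these assumptions, cutting the delay from 200 ms to 20 ms lets the robot run roughly 16% faster with the same safety margin, which is the kind of gain that feeds into the business case.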

Response: A Digital Nervous System

Survival for any entity depends on an ability to deal with threats and danger quickly and effectively. Response depends on analysis and action, and AI gives us analysis and decision making like nothing before it. There’s an interesting story about the Phoenix Memo: in July 2001, an FBI agent filed a report suggesting that there might be a plot to hijack planes and fly them into buildings, based on people of ‘investigative interest’ signing up for flying school. The memo came to nothing because the FBI felt there wasn’t enough evidence. A month later, another agent in Minneapolis asked for a warrant to search the laptop of a man called Moussaoui, who had signed up for flying school, paid some $8,000 in cash, and asked many questions about cockpit doors. The warrant was not granted until it was too late, and the search ended up showing links to the perpetrators of the 9/11 attack. It’s very hard to say whether any of this could have turned out otherwise. But at the very least, if all this data had gone into a common ‘brain’, these 2 pieces of information might have been joined up to create a stronger signal.
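
One standard way a common "brain" can join up weak signals is Bayes' rule in log-odds form: each independent piece of evidence adds its log-likelihood ratio, so two reports that each fall below an alert threshold can cross it together. This is a minimal sketch, assuming the reports are conditionally independent; the prior, the likelihood ratios and the 0.5 threshold are made-up illustrative numbers, not estimates about any real case.

```python
import math

def fused_probability(prior, likelihood_ratios):
    """Posterior probability after combining independent pieces of evidence.

    Works in log-odds: posterior log-odds = prior log-odds + sum of
    log-likelihood ratios. Only valid if the evidence is conditionally independent.
    """
    log_odds = math.log(prior / (1 - prior))
    for lr in likelihood_ratios:
        log_odds += math.log(lr)
    return 1 / (1 + math.exp(-log_odds))

ALERT_THRESHOLD = 0.5  # hypothetical alert level
prior = 0.01           # hypothetical base rate
report_a = 20.0        # hypothetical likelihood ratio of the first report
report_b = 20.0        # hypothetical likelihood ratio of the second report

for label, evidence in [("A alone", [report_a]), ("B alone", [report_b]), ("A + B", [report_a, report_b])]:
    p = fused_probability(prior, evidence)
    print(f"{label}: p={p:.2f}, alert={p >= ALERT_THRESHOLD}")
# A alone: p=0.17, alert=False
# B alone: p=0.17, alert=False
# A + B: p=0.80, alert=True
```

The independence assumption matters: if two reports share a source, adding both overstates the signal.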

Another, more accessible example is the healthcare application Syncrophi, which I’ve written about before. It tracks health data from multiple sources in a hospital environment, creates a risk assessment for each patient, and then creates a single prioritised task list for nurses to follow. This principle can be applied to any complex environment where the risk situation changes constantly, as long as you have the data.
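
Syncrophi's actual scoring isn't described here, so the following is a hypothetical sketch of the general principle: score each patient's risk from their latest readings, then merge every patient's outstanding tasks into one list ordered by that score. The vital-sign ranges, field names and one-point-per-abnormal-reading score are invented for illustration and are not clinical guidance.

```python
# Illustrative "normal" ranges for this sketch only; not clinical thresholds.
NORMAL_RANGES = {"heart_rate": (50, 110), "resp_rate": (10, 24), "spo2": (92, 100)}

def risk_score(vitals):
    """Hypothetical score: one point for every vital sign outside its range."""
    score = 0
    for name, (low, high) in NORMAL_RANGES.items():
        value = vitals.get(name)
        if value is not None and not low <= value <= high:
            score += 1
    return score

def prioritised_tasks(patients):
    """Merge every patient's tasks into one list, highest-risk patients first.

    sort() is stable (even with reverse=True), so tasks for equally risky
    patients stay in their original order.
    """
    rows = []
    for patient, info in patients.items():
        score = risk_score(info["vitals"])
        for task in info["tasks"]:
            rows.append((score, patient, task))
    rows.sort(key=lambda row: row[0], reverse=True)
    return rows

ward = {
    "bed 1": {"vitals": {"heart_rate": 80, "resp_rate": 16, "spo2": 97},
              "tasks": ["routine observations"]},
    "bed 2": {"vitals": {"heart_rate": 124, "resp_rate": 28, "spo2": 89},
              "tasks": ["call doctor for review", "repeat observations in 15 min"]},
    "bed 3": {"vitals": {"heart_rate": 115, "resp_rate": 18, "spo2": 95},
              "tasks": ["repeat observations in 1 hour"]},
}

for score, patient, task in prioritised_tasks(ward):
    print(f"[risk {score}] {patient}: {task}")
```

Re-running the scoring whenever new readings arrive is what keeps such a list current as the risk situation changes.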

When it comes to action in a regular safety incident, the need of the hour is often first responders, and the use of drones to deliver first aid or defibrillators is now well documented. Geolocation capabilities and situational analysis also play a big part.

Prevention: Enter Digital Twins

My futurist colleagues all say the same thing: you can’t predict the future, but you can rehearse it. What they mean is that if you create scenarios from the possible combinations of future states, you can plan for eventualities and practise responses for each, so you’re more likely to be prepared. Digital twins are increasingly being used to ‘rehearse the future’. Rolls-Royce tracks aircraft engines and predicts their potential failure modes so that it can prevent those failures from occurring. TCS has recently created a digital twin of an athlete’s heart, but you can apply the same idea to a store, a supply chain, or a warehouse to rehearse failure scenarios.
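
Real digital twins such as engine models are far richer than this, but the core idea of "rehearsing" failure scenarios can be sketched as a Monte Carlo simulation: run many simulated days, then change one assumption and compare. The three components, their daily failure probabilities and the "worn conveyor" scenario are hypothetical, and the model assumes components fail independently, which real equipment often does not.

```python
import random

def rehearse(failure_probs, days=100_000, seed=42):
    """Monte Carlo 'rehearsal' of a hypothetical line of components.

    Each simulated day, every component fails independently with its given
    probability; the line is down if any component fails. Returns the fraction
    of days the line was down and how often each component failed.
    """
    rng = random.Random(seed)
    down_days = 0
    failures = {name: 0 for name in failure_probs}
    for _ in range(days):
        failed = [name for name, p in failure_probs.items() if rng.random() < p]
        for name in failed:
            failures[name] += 1
        if failed:
            down_days += 1
    return down_days / days, failures

# Hypothetical daily failure probabilities for a warehouse line.
baseline_line = {"conveyor": 0.02, "sorter": 0.01, "scanner": 0.005}
# Scenario to rehearse: the conveyor ages and its failure rate doubles.
worn_conveyor = {**baseline_line, "conveyor": 0.04}

for name, line in [("baseline", baseline_line), ("worn conveyor", worn_conveyor)]:
    rate, failures = rehearse(line)
    print(f"{name}: line down on {rate:.1%} of days; failures by component: {failures}")
```

Using the same seed for both runs means each scenario sees the same random draws, so the difference between them reflects the changed assumption rather than sampling noise.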

Redesign Is Key

When I wrote my book on digital, the central pillar was connect-quantify-optimise. No rocket science there. Once you’ve connected your sensors, identified a problem or pattern, and solved for the risk, the obvious question is how can you reduce the risk by redesigning the operation based on your data and learning.

Designing for safety is a multi-faceted problem with many unsolved issues. Airlines have constantly used data to improve safety in areas ranging from fatigue management to cybersecurity, which is reflected by the fact that 2023 was the safest year on record for airlines.

But as we step into the realities of the cyberphysical world, with the range and power of emerging technological tools as both offensive and defensive options, the need for sensing, analysis, and redesign will only grow by an order of magnitude over the next decade, no matter what industry you’re in.

—

A big shout-out to my colleague Will Straughan, who worked with me on the research for this session.

AI Reading

This week we’re looking closely at the power of narrow AI agents that help you with specific tasks.

Vision: Sam Altman’s vision is one of an incredibly able assistant that knows everything about you and is there to help you at every step. It will be available beyond the chat interface as well. (MIT Tech Review)

Games? Or maybe they’ll be experts at Minecraft and other games, as companions rather than assistants. (TechCrunch)

Hiring - AI vs AI: what happens when candidates use AI to write applications, and hiring managers use AI to review them? The bot-apocalypse? (WSJ)

Sales and Programming Bots: humanised bots trained to work in business development or coding. Meet Alice, your new sales assistant, or Devin, your new programmer. (WSJ)

Nursing Bots: Nvidia and Hippocratic.ai have, somewhat controversially, announced a nursing bot that works at $9/hour (1/10 of the cost of a nurse). Rather than a direct price comparison with human nurses, though, it would be more helpful to understand exactly which tasks the nursing bot does, so that shortages in the nursing sector might be addressed. (QZ)

What About Sora? This piece looks at the potential impact of Sora on jobs. (FT)

Other Reading

Ofcom Children’s Code of Conduct: Ofcom has put in place measures to be implemented by tech companies which will allow for greater protection of children from harmful content especially on social media - including algorithmic changes, age verification, and complaints processes.

The 10x Test: An interesting argument that for any new technology to achieve mass adoption, it needs to make a specific task or set of tasks dramatically better. The original Macintosh had word processing, early PCs had spreadsheets, BlackBerry had email, and so on. The article suggests this is why Humane is still struggling: its device doesn’t make any specific task much better. Is this the same thing as having a killer app? Perhaps.

Gene Therapies for Blindness: an experimental gene therapy restores partial vision in people with inherited blindness. The big question is how little we know about the long-term implications. (CNN)

Fake Meat: why plant-based burgers don’t need to be healthier than beef. Even if they’re just as unhealthy, their environmental benefit is massive. The problem is politics. (MIT Tech Review)

About That Apple Advert …

Everybody is up in arms about this Apple advert, and Apple have actually apologised. The bone of contention seems to be that people’s sensibilities are hurt by the crushing of things we hold dear.

Some people have even suggested the advert would look much better in reverse, and there is something to that. Have a look…

I personally think that part of any creative or artistic endeavour seeks to shock or startle. And Apple are no strangers to this (remember 1984?). In fact, whatever the outcome, Apple must be incredibly happy that the ad has become such a talking point, because the controversy definitely helps spread the content wider and further.

thanks for reading

see you next week.