#204 The Story of Intelligence

The paths of natural and man-made intelligence are intersecting as we speak. What happens next?


How do you define intelligence?

When I asked my colleagues this question, I got a range of replies: from the broad, "the ability to adapt to your environment and evolve", to the specific, "the ability to read situations better than others"; and from the descriptive, "knowing more than the average + going beyond the obvious solutions", to the succinct, "power". The truth is, it's a term that we all use and grasp intuitively, but we don't necessarily have a shared and articulated definition.

For me, intelligence is very simply the ability to process information. Higher intelligence is the ability to process more information in a given period of time. That implies we need both receptors and processing power. People talk about EQ and IQ and all kinds of other intelligence. To me, those all represent the different types of information we need to process. EQ, for example, is the ability to process social and interpersonal information. It involves body language and non-verbal cues, and it also involves picking up and processing all the signals coming from other people, intended or unintended. But I'm getting ahead of myself. To understand the story of intelligence, let's go back to the beginning, say about 65 million years ago, for an early view of the evolution of intelligence.

How We Got Here

The dinosaurs, who were admittedly of limited 'intelligence', were wiped out around this point. As new, smaller species evolved and came to dominate, they needed better eyesight and faster processing: catching birds, insects, and smaller prey required a whole different speed of information processing, so an early jump in brainpower was involved here. The next big jump appears to have come about 14m years ago with the evolution of apes, one of the earliest social species. This was arguably the birth of social intelligence and communication. Then, about 2.5m years ago, there was a significant re-architecting of the skull: the jaw muscles weakened and the skull grew bigger. There's an alluring hypothesis that this was caused by fire and cooking, but sadly this change pre-dates the use of fire by over a million years. This re-architecture set hominids off on the path towards the evolution of the prefrontal cortex, which was further refined by the use of tools 2m years ago. Ultimately the arrival of fire, around 800,000 years ago, did lead to further evolution of the brain, as cooked food let the jaw muscles enjoy even more slack.

This brings us to the era of the upright ape and many species of hominid, such as Homo sapiens, Neanderthals, Homo habilis, and others. According to Yuval Noah Harari, Neanderthals actually had bigger individual brains, but Homo sapiens dominated because they had more social organisation. And so today we, Homo sapiens, enjoy the fruits of this evolutionary path and the benefits of the prefrontal cortex, which is far more developed in humans than in any other species and is responsible for executive functions: decision making, resisting impulses, planning, and what we call consciousness.

Interestingly, the human body had to shrink to enable the brain to flourish, because of the brain's energy requirements. The human brain consumes almost 20% of our energy despite accounting for some 2% of our body mass, which roughly equates to 20 watts of power. That is quite an engineering feat: by some estimates, matching that level of processing power with today's computers would take a machine the size of a building. On balance, nature is still far ahead when it comes to the engineering and hardware for information processing, although admittedly, as we've seen, nature has had a 65m year (at least!) head start.

(By the way, here's how the maths breaks down: adults need approximately 2,000 calories per day, and those are food calories, i.e. kilocalories. At roughly 4.2 kilojoules per kilocalorie, that's about 8,400 kilojoules, or 8,400 x 1,000 joules of energy every day. One joule per second is one watt of power, and there are 86,400 seconds in a day. So (8,400 x 1,000) / 86,400 is the number of joules we burn every second, which gives you roughly 97 watts, call it 100 watts: the rate at which humans burn energy. The brain consumes a fifth of that, which is about 20 watts.)
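If you want to check the arithmetic yourself, here is a small Python sketch of the same calculation. It assumes 2,000 kcal a day, the standard 4.184 kJ per kcal (rather than the rounded 4.2), and that the brain takes about 20% of the total; the helper name `average_power_watts` is just an illustrative choice, not anything from the original post.

```python
# Back-of-the-envelope check: convert daily food energy into average power.
# Inputs are the post's round numbers (assumptions, not measurements).

KJ_PER_KCAL = 4.184               # 1 kilocalorie (a food "calorie") = 4.184 kJ
SECONDS_PER_DAY = 24 * 60 * 60    # 86,400 seconds
BRAIN_SHARE = 0.20                # assumed fraction of energy used by the brain


def average_power_watts(kcal_per_day: float) -> float:
    """Return the average power in watts (joules per second) for a daily intake in kcal."""
    joules_per_day = kcal_per_day * KJ_PER_KCAL * 1000  # kcal -> kJ -> J
    return joules_per_day / SECONDS_PER_DAY


body_watts = average_power_watts(2000)
brain_watts = body_watts * BRAIN_SHARE

print(f"Whole body: {body_watts:.1f} W")  # Whole body: 96.9 W
print(f"Brain: {brain_watts:.1f} W")      # Brain: 19.4 W
```

With the exact conversion factor the body comes out at about 97 W and the brain at about 19 W, close to the rounded 100 W and 20 W figures.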

What about intelligence created by humans?

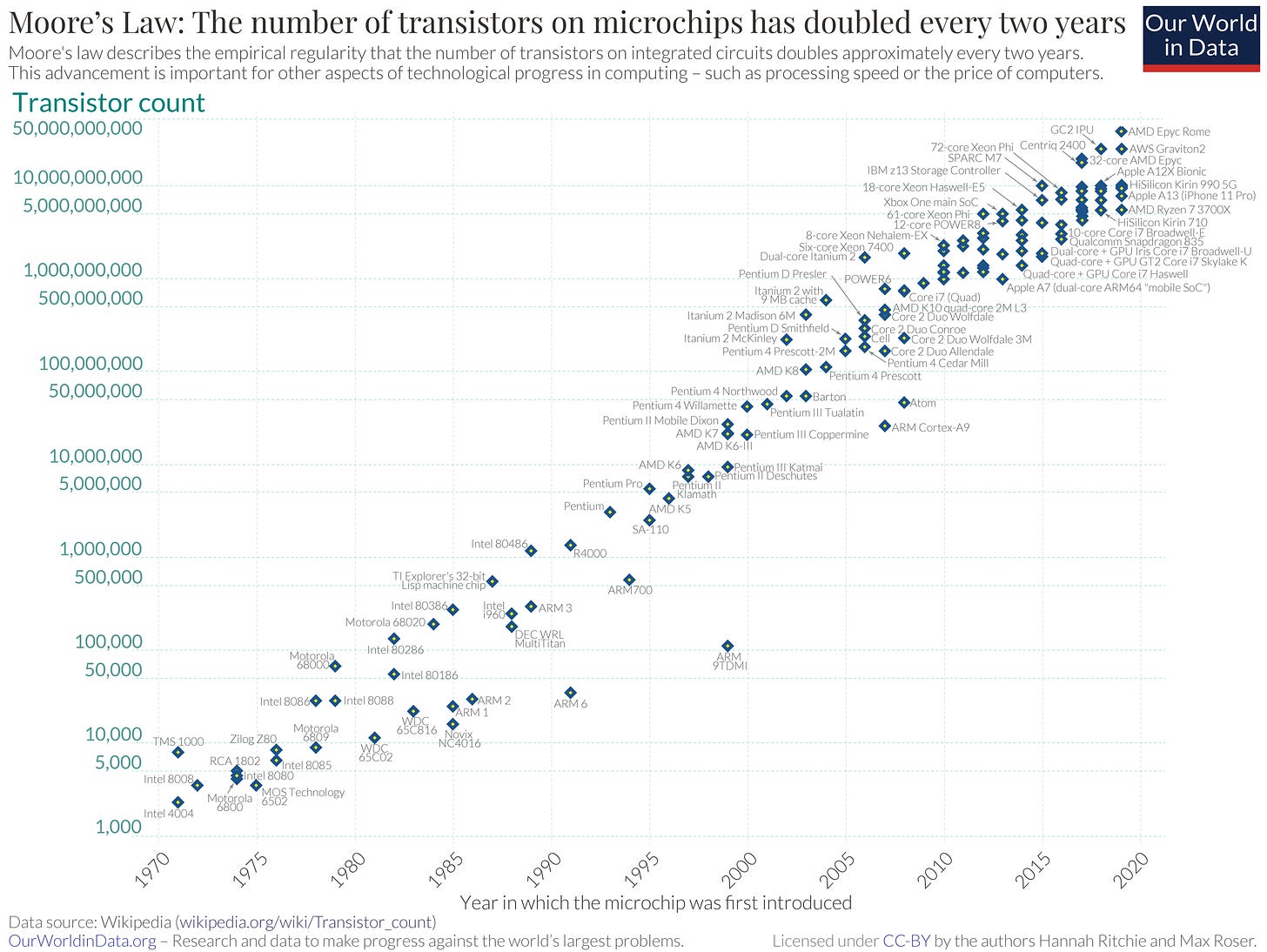

Let's look at the hardware first. The story starts with Charles Babbage, who designed the 'difference engine' in the 1820s and then the more ambitious, programmable 'analytical engine', though neither could be fully built in his lifetime. He is nonetheless credited with conceptualising the programmable machine, or 'computer'. Alan Turing furthered the idea while trying to outwit the Enigma machine in the early 1940s. A logical architecture for a machine to process information was described by John von Neumann in 1945, separating input, memory, logic, control, and output - a model that still prevails in today's computers. Even at this point, computing was executed with vacuum tubes: ENIAC, built at the University of Pennsylvania, had around 17,000 of them.

Shortly after, the transistor arrived, thanks to the scientists at Bell Labs. The transistor, a switch that lets a small signal control a larger flow of current, is core to computers. Semiconductors, so called because they conduct electricity under certain conditions but not others, are the other building block. Silicon became the preferred semiconducting material in the 1950s, and things accelerated rapidly thereafter: from the first silicon transistors in 1954 to the invention of the MOSFET (Metal-Oxide-Semiconductor Field-Effect Transistor) by Mohamed Atalla and Dawon Kahng in 1959. MOSFETs remain the way almost all transistors are built today. Lithography was another leap forward: it allowed circuits to be etched onto wafers, enabling tiny chips that could not be made by any other means. This series of rapid advances allowed Gordon Moore to put forward his famous law in 1965, predicting that the number of components on a chip would double roughly every year (he later revised this to every two years), though he himself didn't envisage it running on for 50 years. Along the way, a vast number of incremental improvements and innovations have helped maintain this rate of hardware evolution.

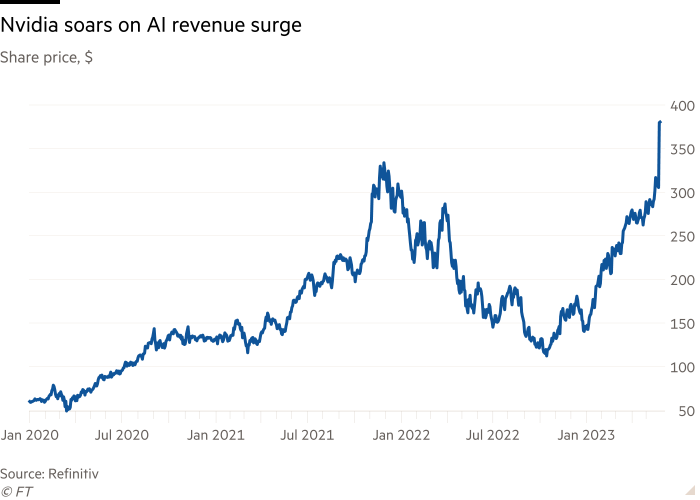

The evolution of transistors, circuits and integrated circuits (ICs) has contributed to our ability to build the hardware on which man-made intelligence can flourish. Today we can produce chips with around 100 billion transistors (remember that even the largest wafers in production are only 300 millimetres in diameter!). Along the way, we have also seen the emergence of graphics processors, critical to image processing and subsequently to AI. Nvidia introduced what it marketed as the first GPU in 1999 and has led the race since then. In 2022 Nvidia launched the H100 chip which, on the back of the generative AI boom, has helped drive Nvidia's market value above a trillion dollars.

And what about the software?

After all, some level of information processing has been inherent in basic software design. Alan Turing set us on the path in 1935 (you could argue Ada Lovelace has an earlier claim, but her programs were written for Charles Babbage's never-completed analytical engine and were therefore theoretical). The ability to pre-code choices and let the software execute against specific options is what most automation and software has been about over the past decades. But you wouldn't really call this intelligence; at best it's a very low-end version.

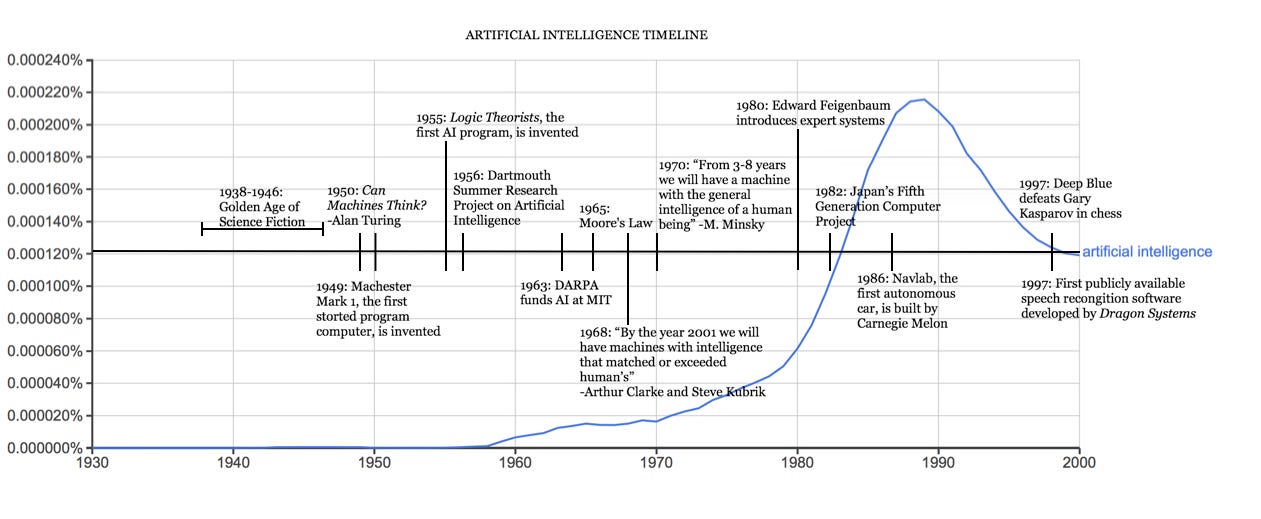

To understand true man-made intelligence, and potentially the future of intelligence, we need once more to step back in history, to the Dartmouth Summer Research Project of 1956, where the notion of Artificial Intelligence was first mooted. At the time it was more of a North Star statement than any kind of scientific parameter for measuring intelligence. Much like a mission to Mars might seem today, it was then a sufficiently distant dream that nobody needed to reflect on what might happen once we got close.

The excitement around AI sparked frenetic activity and a lot of research over the next 20 years, but by the mid-70s it became apparent that computing power was a key bottleneck. Despite attempts such as expert systems, and the efforts of the Japanese government in the 80s, the results fell well short of expectations and research funding dried up. We know this phase as the AI Winter. However, steady progress in algorithms and computing power eventually led to IBM's Deep Blue, which beat Garry Kasparov at chess in 1997, and later to IBM Watson.

Three things have accelerated the AI journey in the past two decades, as many, including Kevin Kelly, have posited: the evolution and growth of GPUs, the evolution of machine learning, and the availability of significant amounts of (big) data for training algorithms. Along the way, a few milestones have been reached: the conquest of chess and then Go, with AlphaGo's defeat of Lee Sedol; the prediction of structures for almost all known proteins; and the ability of AI to match, and potentially transcend, human capabilities in creative endeavours, including words and pictures. Going back to the vision established in 1956, now that we're actually nearing human-level intelligence, what happens when AI gets close to humans has become a real debate.

Intelligence and Knowledge

With any smart system, as you process more and more information, the system may retain some or all of it. This retained information is what we call knowledge. It ranges from linguistic to mathematical, and from survival skills to high-end professional knowledge. The knowledge we accrue is the by-product (or perhaps the purpose) of the information we process. Conversely, the knowledge we have provides a basis for improved information processing, for example during our higher studies or at work.

Here's a way to visualise intelligence (and knowledge) as we know it.

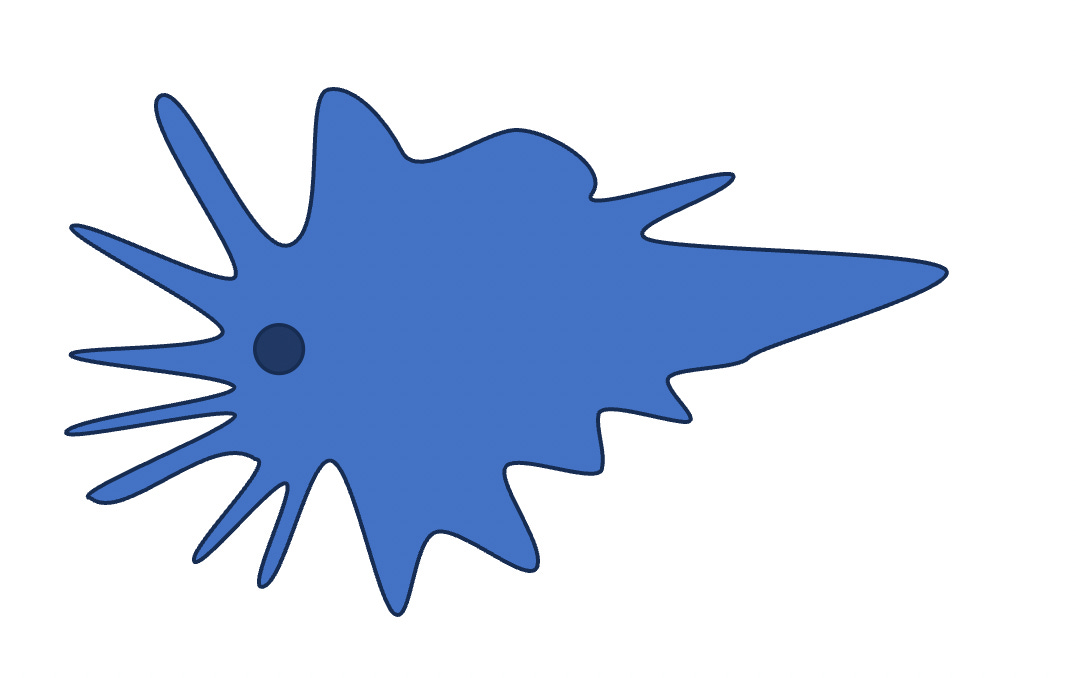

This is how we start out when we're born - as a dot.

We start with lots of potential but know nothing. As babies we're processing tons of information and that dot is growing fast. There's a real chicken-and-egg relationship between knowing things and processing information, one that humans are able to bootstrap quite brilliantly. So the dot grows, and the more we know, the more information we're often able to process. At least it works that way until we know a lot and somehow stop processing new information, especially when it counters what we think we know. At that point we effectively become less intelligent, because our ability to process information arguably starts to shrink or become constrained.

By that time, as adults, our intelligence and intellectual capability looks like this: there are areas of expertise where we know a fair bit, and plenty of other spikes representing areas of interest. My core area of knowledge is technology and innovation. Apart from that, I know about football, bits about impressionism, and certain types of music, but I don't know the first thing about wine, Western classical music, or the Tudors. So my knowledge, like most people's, is spiky. To be honest, the number of things each of us knows about is tiny compared to the vast amount we're ignorant of. And that's before we get to things like cognitive biases! So our knowledge and information processing ability might look like this.

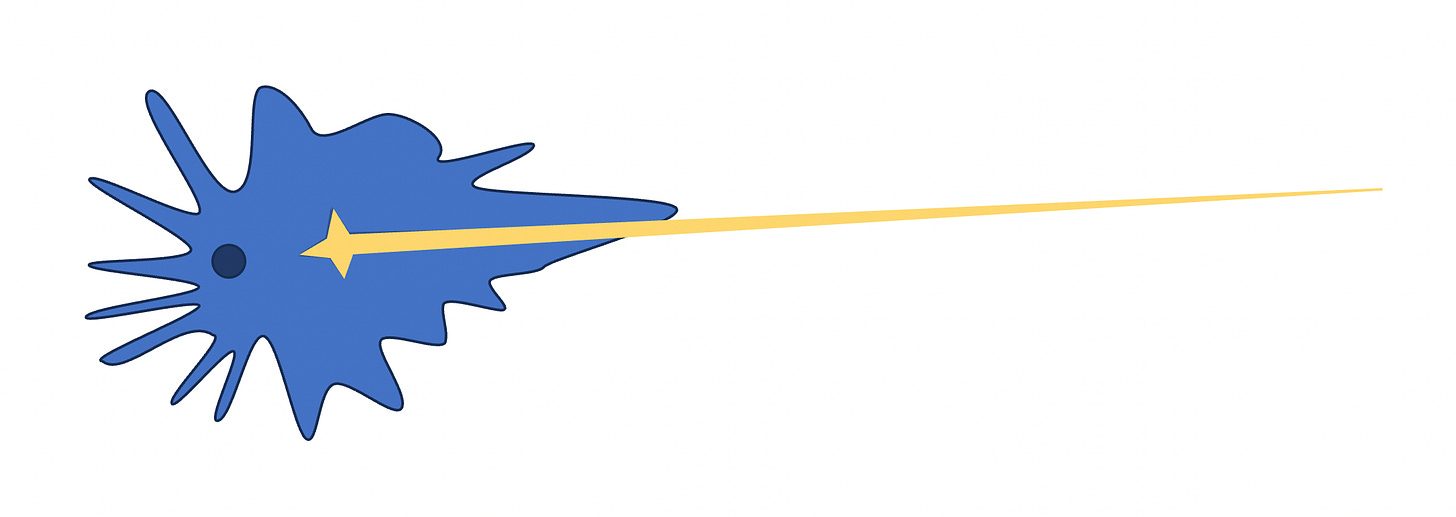

AI needs a lot of help to start with, much like human babies, so initially narrow AI can answer a few questions in specific areas, and invariably can't match a human expert. It’s the little yellow blob. But as we know that’s no reason to laugh at it because it’s learning at an alarming pace.

So given time… i.e. a few months rather than years…

…it can actually equal and then dramatically exceed human capabilities in very specific areas.

Remember that narrow AI, as the name suggests, can only do one thing. It might be far better than humans at recognising cancer cells in a medical image, but it can't recognise a car, let alone start or drive it. It can't give you a recipe for banana bread or sort breeds of dogs. Each of these is a separate narrow AI skill; you can train a narrow AI to do any one of them, but at the cost of the others. Even so, a collection of such narrow tools already gives us a level of superhuman ability!

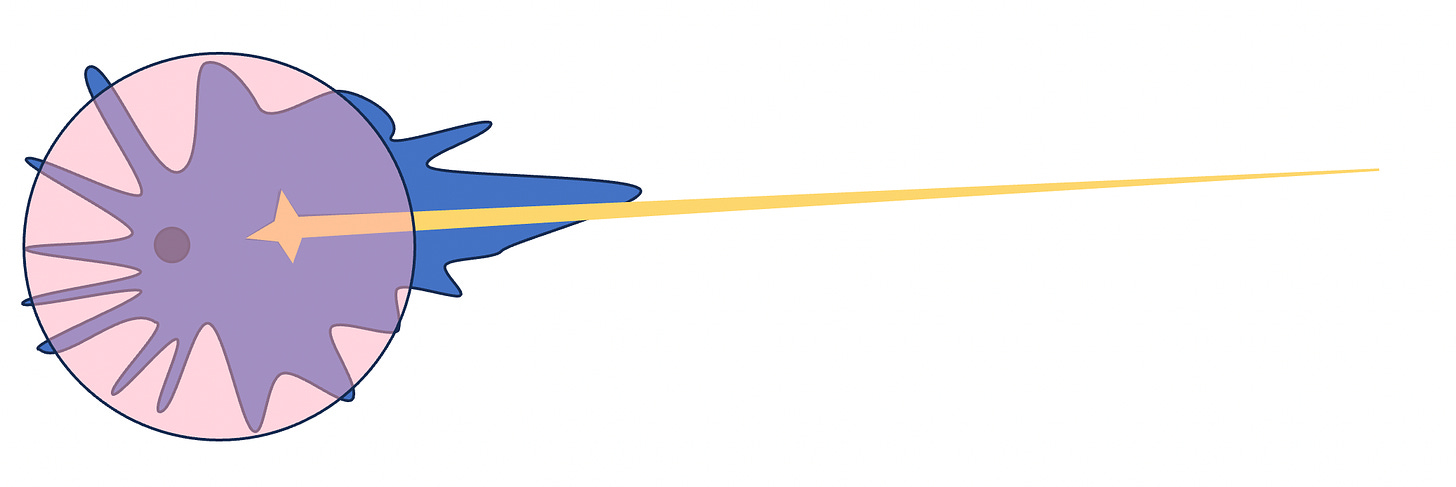

Generative AI, on the other hand, has a very different capability mix. Because of its focus on language and 'meaning', it's good at researching an area or a question and returning plausible answers across a wide range of topics. It thereby significantly improves on the typical human spiky knowledge model, but often fails against trained humans in specific areas. That's today's picture.

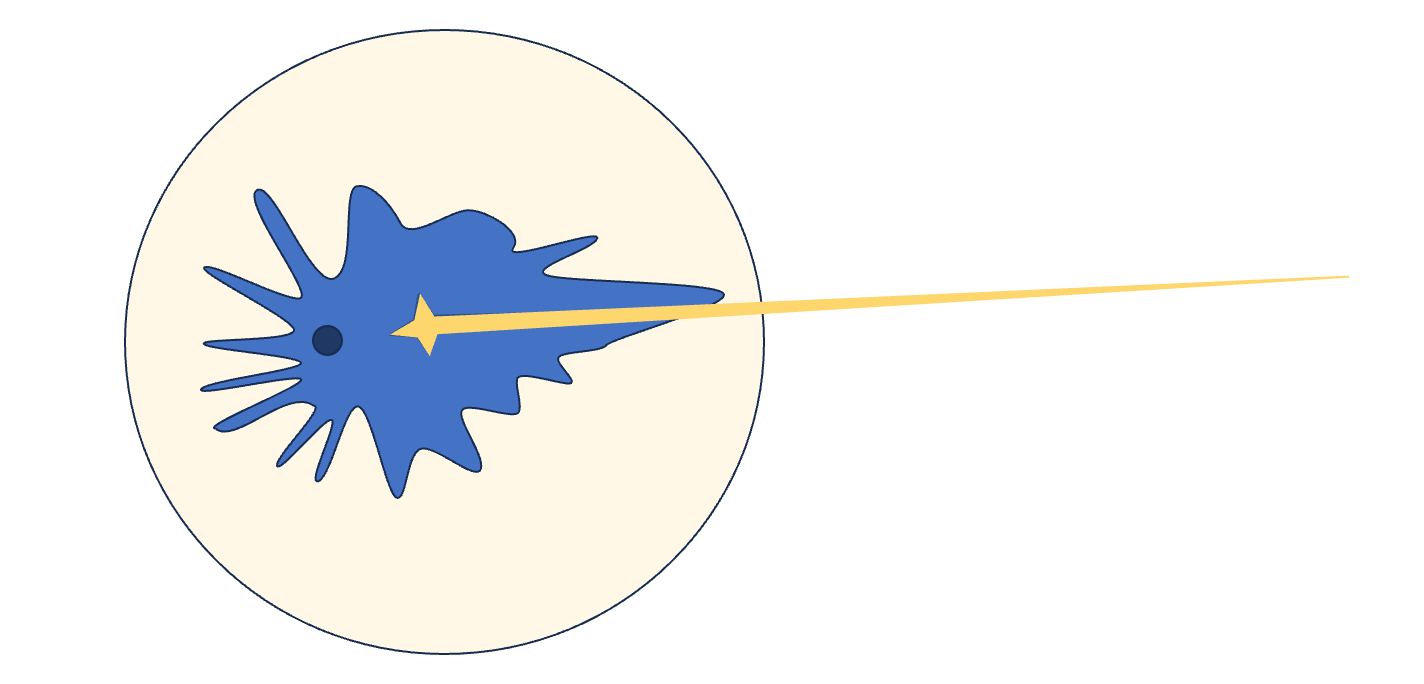

The genie that's out of the bottle is, of course, AI that can teach itself to improve, and keep doing so. This ultimately leads us towards AGI and, beyond it, superintelligence. Humans have the challenge that our knowledge is bounded by our experiences and lifetimes, and each generation has to start all over again. AI can, in theory, keep improving. Here's how it might look in a few years: the AI is an improvement on humans for almost anything.

The thing is, there is no basis on which we can say 'that's enough' - there is no real reason for AI to stop getting better and better, so that it ultimately becomes far superior to humans in a number of ways. On this journey AI could design better underlying hardware for itself, improve its own algorithms, design better neural networks, and improve its own learning efficiency.

All of which means that we will at some point in the not-too-distant future, stop using humans as the benchmark of intelligence. We will need to evolve to a new yardstick and measure humans against that (and we’ll need a new Turing Test). Along the way we will end up re-designing hardware to resemble and hopefully mimic the human brain, and building the capability to tackle a host of complex problems. There is likely to be a continued anthropomorphic evolution as robotics and AI start to converge to give us humanoid intelligence. Artificial Neural Networks will be trained to work with human brains - accepting (and translating) partially processed information or passing semi-digested information back to human brains. At some point we may start to think of technology and AI as a living if not sentient thing. What would we do as a secondary intelligence when AI is smarter than us? More work? More play?

Perhaps the march to the future of intelligence will see us save the planet, reduce inequality, improve governance, and stop wars. One can only hope!

Further Reading:

Here’s a brief history timeline of AI (LiveScience)

Will AI generated art become a genre of its own? (Economist)

AI as a cultural influencer: a Snapchat girlfriend.

The impact of LLMs on labour markets (OpenAI research)

A positive look at the impact of AI on art and creativity. (James Buckhouse on Medium)

Generative AI and white collar jobs (Azeem Azhar - Exponential View)

The Hollywood Writers’ strike and why it might be misguided (Scott Galloway)

The debate about AI and the transformation of Education (FT)

Other Reading

Healthcare: An informative and analytical view of the NHS. GPs are paid just £160 a year per patient, which broadly accounts for about £10 billion of the NHS's £160bn overall budget. The challenge seems to be managing a complex and under-structured entity. The ageing population exerts more pressure, the UK has fewer beds and fewer CT scanners per capita than other rich countries, and it lags in life expectancy. This piece argues that there is too much focus on hospital-based care, and that more needs to be done in virtual wards and community care. The creation of 42 Integrated Care Systems should be a step in the right direction. (Economist)

Culture: Are we nostalgic for good old-fashioned capitalism? The latest slew of movies about corporate ambition and business myth-making (Air, Tetris, BlackBerry, for example) would suggest so. (Quartz)

Education: is there a coming crisis in humanities education at colleges? Computer and information sciences surge as History and English fall (Washington Post)

Metaverse: is Apple launching a mixed reality headset? (Bloomberg)

Electric Vehicles: Today's EV batteries cost $15,000 to make and raise a host of concerns, from the price of lithium to the human rights record of cobalt mining. Could sodium replace lithium in EV batteries, with early examples by the end of this year? It all depends on how expensive lithium continues to be over its lifecycle. Plus, how far are we from swappable batteries? (MIT Technology Review)

Fusion Energy: why the hunt for perfect diamonds is holding back the fusion energy revolution (Medium)

Thanks for reading and see you soon!